The Future of AI: Why Scaling Laws Are Reaching Their Limits

February 15, 2026

By Adeline Bowers

Introduction: The Data Crisis in AI

For years, the AI industry has been driven by one simple principle: bigger is better. More parameters, more data, more compute. But we’re hitting a fundamental wall. As recent research suggests, we are close to exhausting the internet as a source of high-quality training data.

The implications are profound. If we can’t scale data indefinitely, how do we continue improving AI systems? This article explores the three critical problems facing large language models today—and the emerging solutions that might save the industry.

Problem 1: The Data Exhaustion

Research by Pablo Villalobos and colleagues shows that the stock of human-generated text on the internet is growing much more slowly than the datasets language models consume. Once training sets catch up with that stock, it sets an upper bound on dataset size and, consequently, on how much compute can be used productively.
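A simple exponential model shows why slower growth in the stock implies a hard bound. Assume, purely for illustration, that the stock of usable text starts at S0 and grows by a fraction g_s per year, while training-set size starts at D0 and grows by g_d per year, with g_d > g_s. Setting D0(1 + g_d)^t = S0(1 + g_s)^t gives t = ln(S0/D0) / ln((1 + g_d)/(1 + g_s)). The function name and all parameter values below are hypothetical; they are not estimates from the research cited above.

```python
import math

def years_until_exhaustion(stock: float, dataset: float,
                           stock_growth: float, dataset_growth: float) -> float:
    """Years until a training set growing at `dataset_growth` per year catches up
    with a data stock growing at `stock_growth` per year (pure exponential growth).

    Solves dataset * (1 + dataset_growth)**t == stock * (1 + stock_growth)**t for t.
    All inputs are illustrative assumptions, not measured values.
    """
    if dataset >= stock:
        return 0.0  # the dataset already uses the whole stock
    if dataset_growth <= stock_growth:
        return math.inf  # the gap never closes
    return math.log(stock / dataset) / math.log((1 + dataset_growth) / (1 + stock_growth))

# Illustrative only: a stock 100x larger than today's dataset that does not grow,
# against a dataset that doubles every year, is used up in log2(100) ≈ 6.6 years.
print(years_until_exhaustion(stock=100.0, dataset=1.0, stock_growth=0.0, dataset_growth=1.0))
```

Because t depends on the logarithm of S0/D0, a stock ten times larger buys only about log2(10) ≈ 3.3 extra years in this toy setting, which is why a faster-growing appetite for data eventually runs into the ceiling regardless of the starting gap.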

As Ilya Sutskever emphasized in his talk at NeurIPS 2024, this isn’t just a technical problem. It’s a fundamental constraint on AI progress: when you can’t get more high-quality training data, your models hit a ceiling.

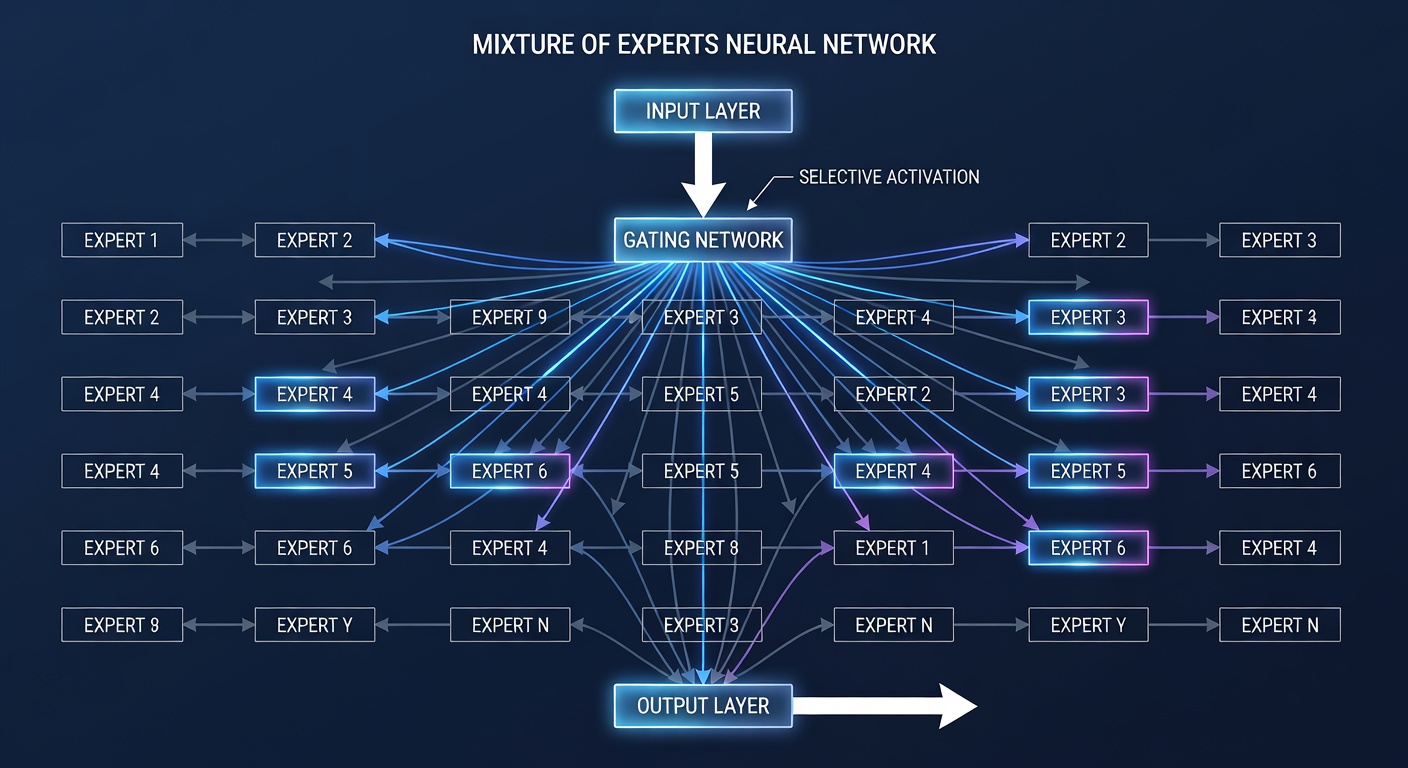

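One partial answer is already in use: mixture of experts (MoE). An MoE model sends each token through only a few of its expert sub-networks, so total parameter count can grow without a proportional increase in compute per token. It does not remove the data constraint, though; a larger model still needs enough data to put its extra capacity to use.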
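A minimal sketch of top-k expert routing makes the compute argument concrete. It assumes a toy linear router and plain Python lists in place of tensors; `moe_forward`, `gate_weights`, and the toy experts are hypothetical names, not any framework's API. All experts' parameters exist, but only `k` of them run for a given input.

```python
import math
from typing import Callable

Vector = list[float]

def softmax(xs: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x: Vector, gate_weights: list[Vector],
                experts: list[Callable[[Vector], Vector]],
                k: int = 2) -> tuple[Vector, list[int]]:
    """Route x to the k experts with the highest router scores and mix their outputs.

    Returns the combined output and the indices of the experts that were evaluated.
    """
    # Linear router: one logit per expert.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Select the top-k experts; the remaining experts are never called.
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax over the selected logits only, so the mixing weights sum to 1.
    mix = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for weight, i in zip(mix, top):
        y = experts[i](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, top

# Usage: 8 toy experts (expert i scales its input by i + 1), but only 2 run per input.
calls: list[int] = []

def make_expert(i: int) -> Callable[[Vector], Vector]:
    def expert(v: Vector) -> Vector:
        calls.append(i)  # record which experts actually do work
        return [(i + 1) * vi for vi in v]
    return expert

experts = [make_expert(i) for i in range(8)]
gate_weights = [[float(i), 0.0] for i in range(8)]  # router favours higher-index experts
output, used = moe_forward([1.0, 0.0], gate_weights, experts, k=2)
print(used, calls, output)
```

Total parameters scale with the number of experts (8 here), while work per input scales with `k` (2 here). Production MoE layers add details this sketch omits, such as load-balancing losses and per-expert capacity limits.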

Problem 2: The Reasoning Model Challenge

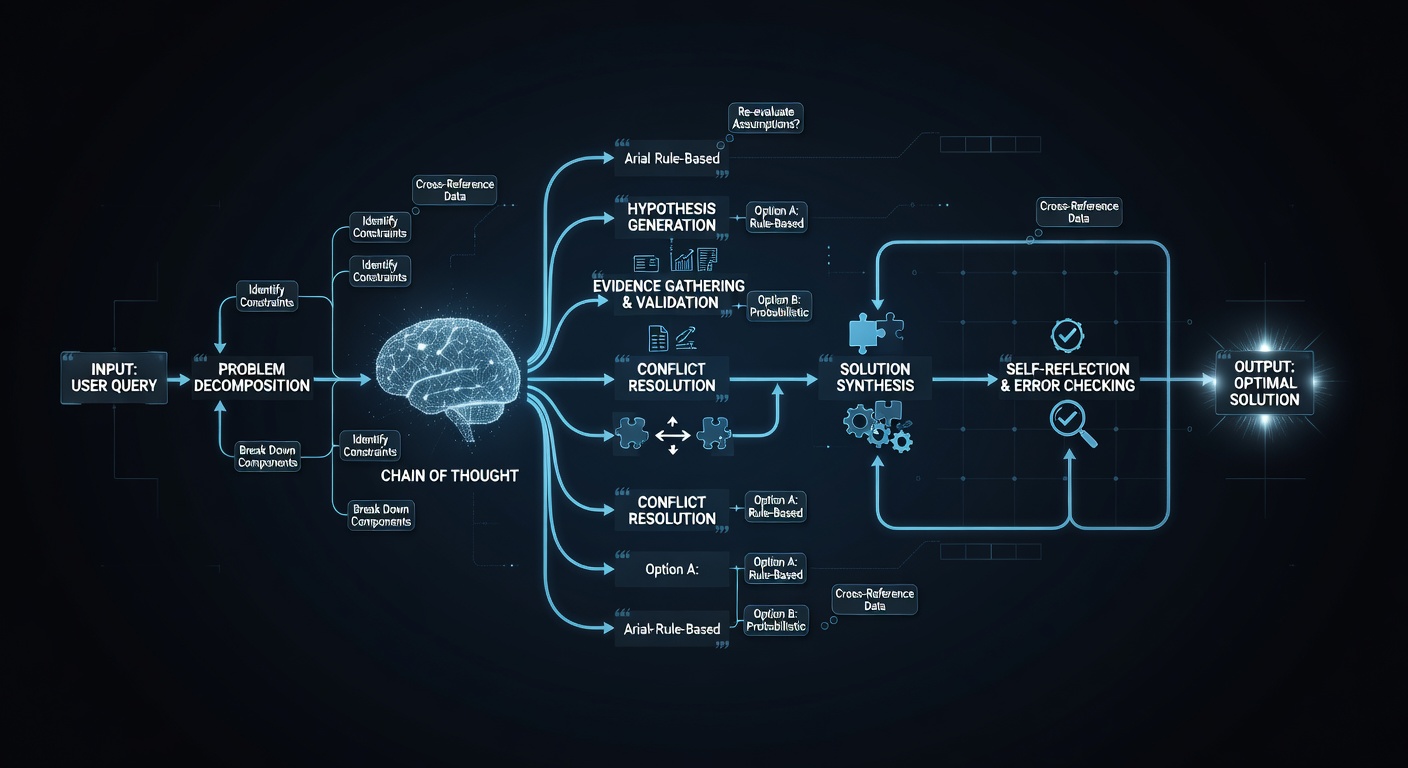

The other widely discussed answer is reasoning models, which spend more computation at inference time working through a problem step by step. But reasoning brings its own set of problems:

Extended Context Requirements

When you ask a reasoning model to solve a complex problem, such as a multi-step math question, it has to “think” through intermediate steps, and every step it writes out takes up space in its context window. Harder problems therefore demand longer contexts, and the longer the context, the greater the risk that the model loses track of critical information.

Anyone who has held multi-turn conversations with an AI assistant spanning days or weeks knows this problem well: the model starts to hallucinate and forget basic information. It’s frustrating, and it limits what we can expect from reasoning models.
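One simple policy a chat application might use when the context fills up is to drop the oldest turns first, which is one concrete way early information disappears. The sketch below assumes that policy and uses whitespace-separated words as a crude stand-in for tokens; `fit_to_budget`, the budget, and the conversation are hypothetical. Real models can also lose track of information that is still inside the window, which this sketch does not model.

```python
def count_tokens(text: str) -> int:
    # Crude stand-in: real models use subword tokenizers, not whitespace splitting.
    return len(text.split())

def fit_to_budget(messages: list[str], budget: int) -> list[str]:
    """Keep the most recent messages whose total token count fits in the budget.

    Older messages are dropped first (a hypothetical truncation policy).
    """
    kept: list[str] = []
    used = 0
    for msg in reversed(messages):
        cost = count_tokens(msg)
        if used + cost > budget:
            break  # this message and everything older is dropped
        kept.append(msg)
        used += cost
    return list(reversed(kept))

history = [
    "User: My name is Dana.",
    "Assistant: Nice to meet you, Dana.",
    "User: Solve 3x + 5 = 20 step by step.",
    "Assistant: " + "thinking " * 30 + "so x = 5.",  # a long reasoning trace
    "User: What is my name?",
]
kept = fit_to_budget(history, budget=50)
# The reasoning trace crowds out the early turns, so "Dana" is no longer in context.
print(kept)
```

The longer the reasoning trace, the sooner earlier turns fall outside the budget, even though the user's question depends on them.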

Base Model Ceiling

Here’s the uncomfortable truth: a model’s reasoning is capped by the capability of its base model. Recent research suggests that no matter how much reinforcement learning you apply, you largely cannot exceed what is already buried in the pre-trained model.

As one analysis put it, “reinforcement learning doesn’t teach your model anything new. It just amplifies pre-existing knowledge that might be buried very deep within your model.”
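One common way to probe such a ceiling is to compare a base model with its RL-tuned version at pass@k: the probability that at least one of k sampled answers is correct. At large k, pass@k approximates what a model can solve at all, not just what it solves on the first try. The sketch below implements the standard unbiased estimator introduced with the Codex evaluation (Chen et al., 2021); the function name and the example numbers are illustrative assumptions.

```python
from math import comb

def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate from n samples, c of which are correct.

    Equals the probability that a random size-k subset of the n samples
    contains at least one correct answer: 1 - C(n - c, k) / C(n, k).
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset must include a correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)

# Hypothetical example: a problem the model solves in 5 of 100 sampled attempts.
print(pass_at_k(100, 5, 1))   # first-try success rate
print(pass_at_k(100, 5, 50))  # chance that some answer among 50 attempts is correct
```

A problem solved in only 5 of 100 samples has pass@1 of 0.05 but pass@50 of roughly 0.97. If the base model’s pass@k at large k matches or exceeds the RL-tuned model’s, RL has mostly made existing answers easier to reach rather than adding new ones.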

Problem 3: The Vocabulary Limitation

Here’s a less obvious problem: models operate on a fixed vocabulary of tokens, built largely from the languages that dominate their training data. This creates challenges for multilingual and cross-cultural applications.

Consider Persian culture, which has an elaborate ritual of polite give-and-take. Persian has a dedicated word for this social custom, taarof, with no direct equivalent in English or other languages. When an AI system processes languages with richer social conventions, something gets lost in translation.

This isn’t just about translation. It’s about how models represent knowledge across different cultures and languages. The vocabulary constraint limits AI’s ability to understand nuanced human interactions.
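To make “operating on vocabulary” concrete, here is a sketch of greedy longest-match subword tokenization, similar in spirit to how WordPiece-style tokenizers split words at inference time. The toy vocabulary is a hypothetical stand-in for one learned mostly from English text. Words it covers map to a few meaningful pieces; a word like taarof, or the same word in Persian script, breaks into fragments that carry none of its meaning on their own.

```python
def tokenize(word: str, vocab: set[str]) -> list[str]:
    """Split a word into the longest vocabulary pieces, scanning left to right.

    Characters not covered by any vocabulary entry fall back to single characters.
    """
    tokens: list[str] = []
    i = 0
    while i < len(word):
        # Try the longest substring starting at i that appears in the vocabulary.
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                tokens.append(word[i:j])
                i = j
                break
        else:
            # No vocabulary entry matches: fall back to one character.
            tokens.append(word[i])
            i += 1
    return tokens

# Hypothetical toy vocabulary, standing in for one learned mostly from English text.
VOCAB = {"polite", "ness", "offer", "ing", "re", "fuse", "ta", "of"}

print(tokenize("politeness", VOCAB))  # ['polite', 'ness']
print(tokenize("offering", VOCAB))    # ['offer', 'ing']
print(tokenize("taarof", VOCAB))      # ['ta', 'a', 'r', 'of']
print(tokenize("تعارف", VOCAB))       # five single Persian characters
```

Many production tokenizers fall back to bytes rather than characters, and each Persian letter takes two bytes in UTF-8, so underrepresented scripts can cost even more tokens per word than this sketch shows.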

The Path Forward

So what’s the solution? Several approaches are emerging:

- Synthetic Data: Using AI-generated data to supplement human-created content

- Reasoning Efficiencies: New architectures that extract more reasoning from smaller models

- Multimodal Learning: Moving beyond text to images, audio, and other data sources

- Specialized Fine-Tuning: Focusing on specific domains rather than general-purpose models
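One common pattern for synthetic data is generate-then-verify. A minimal sketch, assuming a domain where correctness can be checked exactly (simple arithmetic here): generate problems programmatically, have a generator propose answers, and keep only the answers that pass verification. `noisy_generator` is a hypothetical stand-in for a model, and every name below is illustrative rather than any specific pipeline’s API.

```python
import operator
import random

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}

def make_problem(rng: random.Random) -> str:
    """Create a two-operand arithmetic question."""
    a, b = rng.randint(2, 99), rng.randint(2, 99)
    return f"What is {a} {rng.choice(list(OPS))} {b}?"

def solve(question: str) -> int:
    """Exact answer, recomputed from the question text (the verifier)."""
    a, op, b = question.removeprefix("What is ").removesuffix("?").split()
    return OPS[op](int(a), int(b))

def noisy_generator(question: str, rng: random.Random, error_rate: float = 0.3) -> int:
    """Hypothetical stand-in for a model: usually right, sometimes off by a little."""
    answer = solve(question)
    if rng.random() < error_rate:
        answer += rng.choice([-2, -1, 1, 2])
    return answer

def build_dataset(n: int, seed: int = 0) -> list[dict[str, object]]:
    rng = random.Random(seed)
    kept: list[dict[str, object]] = []
    for _ in range(n):
        question = make_problem(rng)
        candidate = noisy_generator(question, rng)
        # Verification: keep only samples whose answer matches the exact solution.
        if candidate == solve(question):
            kept.append({"question": question, "answer": candidate})
    return kept

dataset = build_dataset(200, seed=1)
print(len(dataset), dataset[:2])
```

In this toy the verifier is exact, so every kept sample is correct. In realistic settings (proofs, code, open-ended text) building the checker is the hard part, and an imperfect checker lets errors into the synthetic set.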

The era of simply making models bigger is ending. The future belongs to those who can do more with less—and find creative ways around the fundamental limits we’ve discovered.

Conclusion

The AI industry stands at an inflection point. For years, we’ve been able to throw more data, more parameters, and more compute at our problems. That era is over.

But this isn’t a crisis—it’s an evolution. The next generation of AI breakthroughs will come from smarter architectures, better data utilization, and innovative approaches to reasoning. The limits we’ve discovered aren’t the end of AI progress. They’re the beginning of a more sophisticated era.

As we move forward, the question isn’t “how big can we go?” but “how smart can we get?” And that might be a more interesting question anyway.

Adeline Bowers is a Senior Cloud Architect at Google Cloud Platform with expertise in machine learning infrastructure and scalable systems. She writes about emerging technologies and their impact on society.